Using Machine Learning to Predict Rudder and Boom Position of a Sailing Dinghy

In a recent article we covered how artificial intelligence (AI) can be used in sailing. In this article we show more specifically how AI and machine learning can be applied to dinghy sailing. One potential use case is predicting the rudder and boom displacement of a sailing dinghy from videos and images. Such a model can be useful for sailing instruction and could potentially help improve performance at a more professional level. If software knows the boom and rudder position of a dinghy, it could instruct a sailing student how to behave in certain situations (e.g. to keep the rudder straight). By analyzing videos of dinghy sailors, one could also work out why certain behaviour, such as a specific rudder movement, leads to better performance and outcomes.

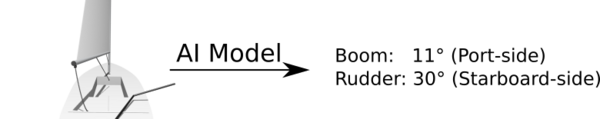

The basic idea is that you have an image of a sailing dinghy taken from the stern. The image should show the whole dinghy and sailor, especially the boom and tiller. The image is fed into a machine learning model, which predicts two angles: the rudder displacement and the boom displacement.

In this article I would like to cover some of the basics of how machine learning can help infer rudder and boom position from images. I will also explain our machine learning approach and why simulated data, rather than real sailing images, was used to train the AI model.

Predicting object orientation, angles and dimensions from images

Looking at the state of the art in computer vision, most research, applications and libraries focus on image classification, object detection and mask segmentation. Whilst image classification predicts a class (label) for the whole image, object detection returns bounding boxes along with their class labels, and mask segmentation returns a pixel mask for each object.

However, for the use case above we want a number: the rudder or boom displacement expressed as an angle. Predicting a continuous quantity like this is typically a regression problem.

Treating it as a classification problem

One possible approach to detecting rudder and boom position is to treat it as a classification problem. You could go through a set of images of sailing dinghies and label each one, for example with whether the dinghy is on a port or starboard tack. This would be a classification problem with two classes. Once trained, the model would return the probability that an image shows a dinghy on a port or on a starboard tack.

The issue is that this tells us nothing about whether the dinghy is sailing close to the wind or bearing away: we would only get the tack. We could, of course, create more than two classes and use bins to represent different points of sail, but the result would still be a coarse category rather than an angle.
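To make this limitation concrete, here is a minimal sketch of how a continuous boom angle could be binned into tack and point-of-sail classes. The sign convention (positive means the boom is out to starboard, i.e. a port tack, since the boom sits on the leeward side) and the bin edges of 15° and 45° are illustrative assumptions, not values from our model.

```python
import bisect

# Illustrative bin edges on the absolute boom angle in degrees (assumed values).
BIN_EDGES = [15.0, 45.0]
POINTS_OF_SAIL = ["close-hauled", "reaching", "running"]


def boom_angle_to_class(boom_angle_deg: float) -> str:
    """Map a signed boom angle to a coarse class label.

    Assumed convention: positive angles mean the boom is out to starboard,
    which happens on a port tack. An angle of exactly 0 is assigned to
    starboard tack arbitrarily.
    """
    tack = "port" if boom_angle_deg > 0 else "starboard"
    point_of_sail = POINTS_OF_SAIL[bisect.bisect_right(BIN_EDGES, abs(boom_angle_deg))]
    return f"{tack} tack, {point_of_sail}"


print(boom_angle_to_class(10.0))   # port tack, close-hauled
print(boom_angle_to_class(20.0))   # port tack, reaching
print(boom_angle_to_class(40.0))   # port tack, reaching
print(boom_angle_to_class(-60.0))  # starboard tack, running
```

Boom angles of 20° and 40° end up in the same class, so the difference between them is lost; a regression model keeps it.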

Using a single stage regression network

As stated above, predicting quantities is typically a regression problem. There are regression networks that take images as input and return a number, for instance convolutional neural networks (CNNs) used to predict image orientation. However, designing a suitable architecture and training such a model takes considerable time and effort, and such a model can be hard to debug and interpret.

Using a two stage approach to predict rudder and boom displacement

We therefore decided to use a two-stage approach to predict rudder and boom displacement.

The first stage is a keypoint detection model that predicts keypoints such as the rudder location, the gooseneck and the end of the boom. It returns the image coordinates (x and y values) of these dinghy parts.

The second stage consists of two simple random forest regressors that take the coordinates from the first stage as input and return the rudder and boom displacement angle, respectively.

Using two stages allows us to check the intermediate result, i.e. the detection quality of the keypoint detector. We can then optimize each stage individually by trying out different approaches.
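The structure of the two-stage approach can be sketched in plain Python. The class name, the keypoint names and the stand-in lambdas are hypothetical placeholders for the trained keypoint detector and the two regressors; the point is that the detected keypoints are returned alongside the angles, so each stage can be inspected and swapped independently.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Sequence, Tuple

Point = Tuple[float, float]


@dataclass
class TwoStagePredictor:
    """Chains a keypoint detector (stage 1) with two angle regressors (stage 2)."""

    detect_keypoints: Callable[[Any], Dict[str, Point]]  # image -> {name: (x, y)}
    predict_rudder: Callable[[List[float]], float]       # features -> rudder angle
    predict_boom: Callable[[List[float]], float]         # features -> boom angle
    keypoint_order: Sequence[str]                        # fixed feature order

    def __call__(self, image: Any) -> Dict[str, Any]:
        keypoints = self.detect_keypoints(image)
        # Flatten the (x, y) pairs in a fixed order so both regressors see the same layout.
        features = [coord for name in self.keypoint_order for coord in keypoints[name]]
        return {
            "keypoints": keypoints,  # intermediate result, kept for inspection
            "rudder_deg": self.predict_rudder(features),
            "boom_deg": self.predict_boom(features),
        }


# Usage with hypothetical stand-ins for the trained models:
predictor = TwoStagePredictor(
    detect_keypoints=lambda image: {"gooseneck": (320.0, 210.0), "boom_end": (420.0, 250.0)},
    predict_rudder=lambda features: 0.0,
    predict_boom=lambda features: 25.0,
    keypoint_order=["gooseneck", "boom_end"],
)
result = predictor(image=None)
```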

Data acquisition

By design, machine learning models require data as input. Here we focus on supervised machine learning, where the data needs to be labeled: the ground truth (the actual rudder and boom displacement) is required for every image.

There are plenty of images of sailing dinghies available, and it is also easy to take new pictures, either from another boat or with a camera mounted on the dinghy. However, such a setup does not give us the actual rudder and boom displacement. These angles could be estimated by hand, but that is impractical for a large number of images.

One possible solution is to install sensors, such as angle sensors, on the dinghy that record boom and rudder displacement. However, installing such sensors takes time, they need a power supply, and they may be exposed to water, which could cause malfunctions.

Using Blender to simulate dinghy images

Instead of recording real images of a sailing dinghy along with the boom and rudder displacement, we decided to use simulated images. For this we use Blender, a free and open-source 3D computer graphics software. With Blender you can easily create a 3D scene consisting of various elements and objects. After adding light sources and a camera, you can render the scene to get a 2D image.
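Because every object in the simulated scene has a known 3D position, the 2D keypoint annotations come for free: the 3D keypoints only need to be projected through the camera. A minimal NumPy sketch with an ideal pinhole camera (no lens distortion); the coordinate convention, focal length and keypoint positions are assumptions for illustration. Inside Blender, the helper `bpy_extras.object_utils.world_to_camera_view` can be used for this projection instead.

```python
import numpy as np


def project_points(points_cam: np.ndarray, focal_px: float, width: int, height: int) -> np.ndarray:
    """Project 3D points given in camera coordinates (x right, y down, z forward,
    in metres) onto the image plane of an ideal pinhole camera; returns pixel (u, v)."""
    pts = np.asarray(points_cam, dtype=float)
    if np.any(pts[:, 2] <= 0):
        raise ValueError("all points must lie in front of the camera (z > 0)")
    u = focal_px * pts[:, 0] / pts[:, 2] + width / 2.0
    v = focal_px * pts[:, 1] / pts[:, 2] + height / 2.0
    return np.column_stack([u, v])


# Hypothetical keypoints of a dinghy seen from astern, camera 4 m behind the transom.
keypoints_cam = np.array([
    [0.0, 0.3, 4.0],   # rudder pivot on the transom
    [0.0, -0.5, 5.5],  # gooseneck
    [1.2, -0.4, 4.5],  # boom end, boom let out to one side
])
pixels = project_points(keypoints_cam, focal_px=800.0, width=1280, height=720)
```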

Our approach was to use Python to automate the creation of a sailing dinghy in Blender for a given boom and rudder displacement. This allows us to automatically create a large number of images for various boom and rudder displacements and to add variation in camera position, dinghy dimensions and lighting conditions. The Python code creates the hull, mast, boom, sail, rudder and tiller; the color, rudder displacement, boom displacement and certain dimensions are function arguments that can easily be adjusted.
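The key advantage is that the ground truth is known by construction: the angles passed to the generator are the labels. A minimal sketch of the sampling side in plain Python; `SceneParams`, `sample_scene`, the file naming and all value ranges are hypothetical, and the actual Blender (`bpy`) code that builds and renders the dinghy from these arguments is not shown.

```python
import json
import random
from dataclasses import asdict, dataclass


@dataclass
class SceneParams:
    """Hypothetical arguments for a Blender script that builds and renders one dinghy."""
    rudder_deg: float
    boom_deg: float
    hull_length_m: float
    hull_color: str
    camera_offset_m: tuple  # (x, y, z) jitter around the nominal stern camera position
    sun_strength: float


def sample_scene(rng: random.Random) -> SceneParams:
    # Ranges below are illustrative assumptions, not the values used in our data set.
    return SceneParams(
        rudder_deg=rng.uniform(-40.0, 40.0),
        boom_deg=rng.uniform(-80.0, 80.0),
        hull_length_m=rng.uniform(3.5, 4.7),
        hull_color=rng.choice(["white", "red", "blue", "yellow"]),
        camera_offset_m=tuple(rng.uniform(-0.3, 0.3) for _ in range(3)),
        sun_strength=rng.uniform(1.0, 5.0),
    )


rng = random.Random(42)  # fixed seed makes the data set reproducible
scenes = [sample_scene(rng) for _ in range(1000)]
# The sampled angles are the ground-truth labels; store them next to each rendered image.
labels = [json.dumps({"image": f"dinghy_{i:05d}.png", **asdict(s)}) for i, s in enumerate(scenes)]
```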

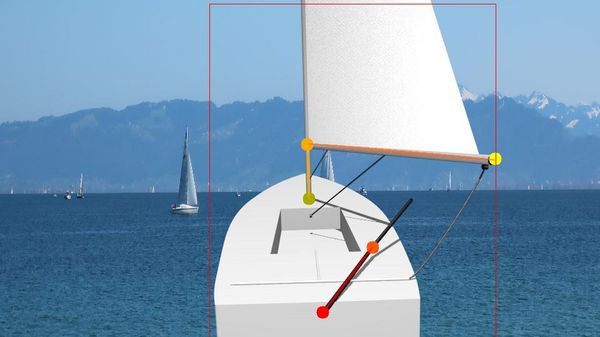

The following image shows an example of what such a simple rendered dinghy model looks like:

Data augmentation

To add variation to the training data we use several data augmentation techniques. In machine learning, data augmentation helps to reduce overfitting and thereby improves generalisation.

To make the input images more realistic, the dinghy model was placed on a suitable background scene. We also added random rotations to simulate the boat heeling. In addition, data augmentation libraries were used to vary the weather (e.g. sun, rain and fog), brightness and contrast.
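One detail matters for keypoint data: geometric augmentations such as the heel rotation must be applied to the keypoint annotations as well, whereas photometric ones (brightness, contrast, weather) leave them unchanged. A minimal NumPy sketch of both kinds; the function names and parameter ranges are illustrative assumptions, and rotating the image pixels is left to an image library, which must use the same centre and direction. Libraries such as Albumentations provide weather effects (e.g. `RandomRain`, `RandomFog`, `RandomSunFlare`) and can transform keypoints together with the image.

```python
import numpy as np


def rotate_keypoints(xy: np.ndarray, angle_deg: float, width: int, height: int) -> np.ndarray:
    """Rotate pixel coordinates (x right, y down) about the image centre by angle_deg,
    counter-clockwise as seen on screen."""
    theta = np.radians(angle_deg)
    cx, cy = width / 2.0, height / 2.0
    x, y = xy[:, 0] - cx, xy[:, 1] - cy
    # With the y axis pointing down, this is a visually counter-clockwise rotation.
    x_rot = x * np.cos(theta) + y * np.sin(theta)
    y_rot = -x * np.sin(theta) + y * np.cos(theta)
    return np.column_stack([x_rot + cx, y_rot + cy])


def adjust_brightness_contrast(image: np.ndarray, brightness: float, contrast: float) -> np.ndarray:
    """Photometric change on a uint8 image: scale contrast around mid-grey, then shift
    brightness. Keypoint coordinates are not affected."""
    out = (image.astype(np.float32) - 127.5) * contrast + 127.5 + brightness
    return np.clip(out, 0, 255).astype(np.uint8)


rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(720, 1280, 3), dtype=np.uint8)
keypoints = np.array([[640.0, 420.0], [640.0, 287.0], [853.0, 289.0]])
heel_deg = rng.uniform(-15.0, 15.0)  # illustrative heel range
keypoints_aug = rotate_keypoints(keypoints, heel_deg, width=1280, height=720)
image_aug = adjust_brightness_contrast(image, brightness=rng.uniform(-30.0, 30.0),
                                       contrast=rng.uniform(0.8, 1.2))
```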

Using PyTorch to train a Keypoint Detection Model

PyTorch is an open-source machine learning library that is popular in computer vision. Its companion library torchvision already provides an implementation of the Keypoint R-CNN detector, which is widely used for human pose estimation. Training the Keypoint R-CNN model on a custom dataset was relatively straightforward and consisted of the following steps:

First, we created a suitable dataset and data loader that load the augmented images along with their annotations from disk and return the required data as torch tensors. The data was split into a training set, used to train the model, and a test set, on which the model's performance was evaluated. We trained with a single object class for the sailing dinghy, using class label 1. Class label 0 must not be used for it, because it is reserved for the background class: we have seen custom training runs that used label 0 end up with a keypoint loss of zero ("loss_keypoint": 0).
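A minimal sketch of such a dataset, assuming torchvision's detection target format: per image, a dict with `boxes` (float tensor [N, 4] as x1, y1, x2, y2), `labels` (int64 tensor [N]) and `keypoints` (float tensor [N, K, 3], the third value being visibility). The class name and the `samples` structure are hypothetical, and images are passed in already loaded rather than read from disk to keep the example self-contained.

```python
import torch
from torch.utils.data import DataLoader, Dataset


class DinghyKeypointDataset(Dataset):
    """Yields (image, target) pairs in the target format of torchvision's Keypoint R-CNN.

    `samples` is a hypothetical list of dicts holding an already loaded image
    (float tensor [3, H, W], values in [0, 1]), the dinghy bounding box
    [x1, y1, x2, y2] and K keypoints [[x, y], ...] in pixel coordinates.
    """

    DINGHY_LABEL = 1  # class label 0 is reserved for the background

    def __init__(self, samples):
        self.samples = samples

    def __len__(self):
        return len(self.samples)

    def __getitem__(self, idx):
        sample = self.samples[idx]
        xy = torch.as_tensor(sample["keypoints"], dtype=torch.float32)  # [K, 2]
        visible = torch.ones((xy.shape[0], 1))  # assumes every keypoint is visible
        target = {
            "boxes": torch.as_tensor([sample["box"]], dtype=torch.float32),  # [1, 4]
            "labels": torch.tensor([self.DINGHY_LABEL], dtype=torch.int64),  # [1]
            "keypoints": torch.cat([xy, visible], dim=1).unsqueeze(0),  # [1, K, 3]
        }
        return sample["image"], target


# Detection models take lists of images/targets, so batches are kept as tuples.
samples = [{"image": torch.rand(3, 100, 100), "box": [10.0, 10.0, 90.0, 90.0],
            "keypoints": [[50.0, 80.0], [50.0, 30.0], [70.0, 35.0]]}]
loader = DataLoader(DinghyKeypointDataset(samples), batch_size=2, shuffle=True,
                    collate_fn=lambda batch: tuple(zip(*batch)))
images, targets = next(iter(loader))
```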

Next, we took the Keypoint R-CNN implementation from torchvision and tailored it to our use case by specifying a suitable backbone, the number of keypoints and the number of trainable layers.

Training was done on a GPU, and model checkpoints were serialized to disk so that the model can later be used in the inference pipeline. Running the trained model on one of the augmented images yields the predicted keypoint locations shown in the following image:

Please have a look at our recent article on training a keypoint detection model if you are interested in more details.
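A minimal sketch of the training step and checkpointing in PyTorch. In training mode, torchvision detection models take a list of images and a list of target dicts and return a dict of losses; for Keypoint R-CNN this includes `loss_keypoint`, the value that stays at zero if the dinghy is labeled 0. The function names and the checkpoint layout are assumptions.

```python
import torch


def train_one_epoch(model, loader, optimizer, device):
    """One pass over the training data. In train mode, torchvision detection models
    take lists of images and target dicts and return a dict of losses."""
    model.train()
    loss_dict = {}
    for images, targets in loader:
        images = [image.to(device) for image in images]
        targets = [{key: value.to(device) for key, value in t.items()} for t in targets]
        loss_dict = model(images, targets)  # for Keypoint R-CNN this includes "loss_keypoint"
        loss = sum(loss_dict.values())
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
    # Losses of the last batch, handy for logging (e.g. to spot a "loss_keypoint" stuck at 0).
    return {key: value.item() for key, value in loss_dict.items()}


def save_checkpoint(model, optimizer, epoch, path):
    """Serialize model and optimizer state so training can resume and inference can load the weights."""
    torch.save({"epoch": epoch,
                "model": model.state_dict(),
                "optimizer": optimizer.state_dict()}, path)
```

For example, with an SGD optimizer such as `torch.optim.SGD(model.parameters(), lr=0.005, momentum=0.9)` (illustrative settings), call `train_one_epoch` once per epoch on the GPU device and `save_checkpoint` afterwards. For inference, restore the weights with `model.load_state_dict(torch.load(path)["model"])` and switch the model to `model.eval()`.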

Using scikit-learn to train a Random Forest Regressor

Once the keypoints are detected in the image, the next step is to predict the actual boom and rudder displacement from their coordinates. For this we use a Random Forest Regressor from the scikit-learn library: it takes the keypoint coordinates as input and outputs an angle. We train two separate models, one for the rudder displacement and one for the boom displacement.
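A minimal scikit-learn sketch of this second stage with two separate regressors. So that it runs on its own, it replaces the detector output with synthetic keypoints generated from known angles by a toy 2D geometry; the keypoint layout, value ranges, noise level and hyperparameters are assumptions, and the resulting scores say nothing about our real model.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
n = 1000

# Synthetic stand-in for stage-1 output (toy 2D geometry, not our real data):
# four keypoints per image, flattened to [gx, gy, bx, by, rx, ry, tx, ty].
boom_deg = rng.uniform(-80.0, 80.0, n)
rudder_deg = rng.uniform(-40.0, 40.0, n)
gooseneck = np.column_stack([rng.uniform(300, 340, n), rng.uniform(200, 220, n)])
boom_end = gooseneck + 150.0 * np.column_stack(
    [np.sin(np.radians(boom_deg)), np.cos(np.radians(boom_deg))])
rudder_pivot = np.column_stack([rng.uniform(310, 330, n), rng.uniform(400, 420, n)])
tiller_end = rudder_pivot + 60.0 * np.column_stack(
    [np.sin(np.radians(rudder_deg)), -np.cos(np.radians(rudder_deg))])
X = np.hstack([gooseneck, boom_end, rudder_pivot, tiller_end]) + rng.normal(0.0, 2.0, (n, 8))

X_train, X_test, boom_train, boom_test, rudder_train, rudder_test = train_test_split(
    X, boom_deg, rudder_deg, test_size=0.2, random_state=0)

# Two independent models that share the same input features.
boom_model = RandomForestRegressor(n_estimators=100, random_state=0).fit(X_train, boom_train)
rudder_model = RandomForestRegressor(n_estimators=100, random_state=0).fit(X_train, rudder_train)

print("boom R^2 on test set:", boom_model.score(X_test, boom_test))
print("rudder R^2 on test set:", rudder_model.score(X_test, rudder_test))
```

`RandomForestRegressor` also accepts a two-column target and would then fit both angles in one model; two separate models mirror the setup described above and let each be tuned on its own.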

As a pre-processing step, the absolute x- and y-coordinates of the keypoints are replaced by their relative differences, which are then normalized. For training, the data is again split into a training and a test set. The following chart shows the relation between the actual displacement and the predicted values on the test set:
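The pre-processing step described above can be sketched in NumPy. The text does not pin down which differences or which normalization are used, so this is one plausible choice, stated as an assumption: all pairwise keypoint offsets, scaled per sample by the largest pairwise distance. Offsets relative to a single reference keypoint such as the gooseneck, or scikit-learn's `StandardScaler`, would be equally possible.

```python
import itertools

import numpy as np


def relative_features(keypoints: np.ndarray) -> np.ndarray:
    """Turn absolute keypoints of shape (n_samples, K, 2) into normalized pairwise differences.

    For every keypoint pair (i, j) the offset (x_j - x_i, y_j - y_i) is computed, which removes
    the dependence on where the dinghy sits in the image. Each sample is then divided by its
    largest pairwise distance, which removes the dependence on image scale (zoom, distance).
    Returns an array of shape (n_samples, K * (K - 1)).
    """
    kp = np.asarray(keypoints, dtype=float)
    pairs = list(itertools.combinations(range(kp.shape[1]), 2))
    diffs = np.stack([kp[:, j] - kp[:, i] for i, j in pairs], axis=1)  # (n, n_pairs, 2)
    scale = np.linalg.norm(diffs, axis=2).max(axis=1)  # (n,)
    scale = np.where(scale > 0, scale, 1.0)  # guard against degenerate samples
    return (diffs / scale[:, None, None]).reshape(len(kp), -1)


keypoints = np.array([[[320.0, 210.0], [420.0, 250.0], [320.0, 410.0], [300.0, 360.0]]])
features = relative_features(keypoints)
shifted_and_zoomed = relative_features(2.0 * keypoints + 50.0)
```

Shifting or zooming the keypoints leaves the features unchanged, so the regressors no longer have to learn where in the frame the dinghy happens to be.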

Outlook and further improvement

Currently we are working together with a number of dinghy sailors to test and evaluate our model on their data. Our goal is to cover a broad range of sailing dinghies with good accuracy. Please do not hesitate to contact us if you wish to try out the model on your data.

On the technical side we are planning a number of changes to improve model performance:

- For a fixed camera setup, we intend to predict certain locations per video sequence rather than per image. Parts of the boat that do not move relative to the camera should have the same position in all images of a single setup.

- Rudder and boom displacement also need to be consistent over time. There is no way of going from a starboard to a port tack without the boom swinging across. Predicting displacement for a sequence of images rather than for single images could potentially increase accuracy further.
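Both ideas could be implemented as simple post-processing. A minimal NumPy sketch of one possible implementation, assuming a per-sequence median for keypoints that do not move relative to the camera and a centred moving median over time for the predicted angles; the function names and the window size are hypothetical, and a learned sequence model would be another option.

```python
import numpy as np


def fix_static_keypoints(keypoints_seq: np.ndarray, static_idx) -> np.ndarray:
    """keypoints_seq: (n_frames, K, 2) detections for one video from a fixed camera.
    Keypoints listed in static_idx (parts that do not move relative to the camera) are
    replaced by their per-sequence median, which suppresses per-frame outliers."""
    out = np.array(keypoints_seq, dtype=float)  # copy, the input stays untouched
    out[:, static_idx] = np.median(out[:, static_idx], axis=0)
    return out


def smooth_angles(angles_deg: np.ndarray, window: int = 5) -> np.ndarray:
    """Centred moving median over time. A spurious jump lasting fewer than half the
    window length (e.g. a one-frame 'tack') is removed; a real tack is kept."""
    if window % 2 == 0:
        raise ValueError("window must be odd so the median is centred")
    angles = np.asarray(angles_deg, dtype=float)
    half = window // 2
    padded = np.pad(angles, half, mode="edge")  # repeat edge values at both ends
    return np.array([np.median(padded[i:i + window]) for i in range(len(angles))])


boom_per_frame = np.array([30.0, 31.0, -30.0, 32.0, 33.0])  # frame 2 flips sides: implausible
print(smooth_angles(boom_per_frame, window=3))  # [30. 30. 31. 32. 33.]
```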

If you are interested in our AI model, the process of training a keypoint detection model or in case of any other inquiry, please do not hesitate to contact us.